PART THREE: EVGA GeForce GTX 680

Mac Edition versus the 'Sharks'

Posted Friday, April 26th, 2013 by rob-ART morgan, mad scientist

In response to popular demand, here is the EVGA GeForce GTX 680 Mac Edition compared to non-standard Mac Pro GPUs like the GeForce 580 Classified and GeForce 680 Classified.

GRAPH LEGEND

GTX 690 = ASUS NVIDIA GTX 690 (4G GDDR5)

GTX 680C = EVGA NVIDIA GTX 680 Classified (4G GDDR5)

GTX 680 Mac = EVGA NVIDIA GeForce GTX 680 Mac Edition (2G VRAM)

GTX 580C = EVGA NVIDIA GTX 580 Classified (3G GDDR5)*

GTX 580E = EVGA NVIDIA GTX 580 (3G GDDR5)*

GTX 570 = EVGA NVIDIA GTX 570 (2.5G GDDR5)*

QK5000 = NVIDIA Quadro K5000 (4G GDDR5)

Q6000 = NVIDIA Quadro 6000 (6G GDDR5)*

Radeon 7970G = Sapphire AMD Radeon HD 7970 Gigahertz (3G GDDR5)

Radeon 7970F = HIS AMD Radeon HD 7970 925MHz (3G GDDR5)*

Radeon 7950 = Sapphire AMD Radeon HD 7950 GPU (3G GDDR5)

Radeon 5870 = Apple factory AMD Radeon HD 5870 GPU (1G GDDR5)

All Mac Pro GPUs above were tested in a 'Mid 2010' Mac Pro 3.33GHz Hex-Core running OS X 10.8.3.

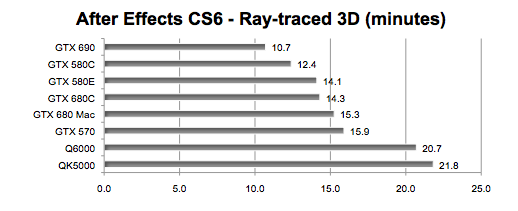

After Effects CS6 Ray-traced 3D render of an animated robot. Thankfully, AE measures and displays the render time. Since it can take many minutes, that's a blessing. Technically, you can render this project with an AMD GPU, but that could take literally hours. Recently we tried to render with a Radeon HD 5870. After 8 hours, it was only three-quarters done. If you plan to use the Ray-traced 3D render function often, a CUDA capable NVIDIA GPU is the preferred tool. (FASTEST GPU has the LOWEST time in MINUTES.)
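To put the Radeon HD 5870 attempt in perspective, here is a rough extrapolation of what the full render might have taken. It is only a sketch: it assumes the render progresses at a constant rate, which we did not verify, and the function name `estimate_total_hours` is ours, not anything from After Effects.

```python
def estimate_total_hours(elapsed_hours: float, fraction_done: float) -> float:
    """Extrapolate total render time, ASSUMING progress is linear in time."""
    if not 0 < fraction_done <= 1:
        raise ValueError("fraction_done must be in (0, 1]")
    return elapsed_hours / fraction_done


# Figures from the Radeon HD 5870 attempt: 8 hours elapsed, three-quarters done.
total = estimate_total_hours(8.0, 0.75)
remaining = total - 8.0
print(f"Estimated total: {total:.1f} h, remaining: {remaining:.1f} h")
```

Under that assumption, the Radeon HD 5870 would have needed a little under 11 hours in total for the same project.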

NOTE: The AE Ray-traced 3D animation we refer to as "robot" was provided courtesy of Juan Salvo and Danny Princz. Danny sent us a link to a compilation of render times featuring up to three GPUs.

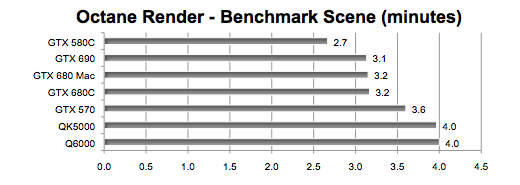

Octane Render is a "GPU only" standalone renderer that can process scenes created in Maya, ArchiCAD, Cinema 4D, etc. -- and does so in a fraction of the time it takes with a CPU-based renderer. However, it runs only on CUDA-capable NVIDIA graphics cards. The DEMO comes with a scene called octane_benchmark.ocs. For our test we clicked on RenderTarget PT (Path Tracing). The render time is tracked and displayed in minutes and seconds. (FASTEST GPU has the LOWEST time in MINUTES.)

CUDA-Z is a utility that collects information from CUDA-enabled NVIDIA GPUs, such as core clock speed and memory capacity. It can also be used to measure performance. Here are the test results for both Single Precision and Double Precision Floating Point calculations. Note the shuffle in ranking that happens in the shift from Single to Double Precision. (LONGEST bar indicates FASTEST in Gigaflops.)

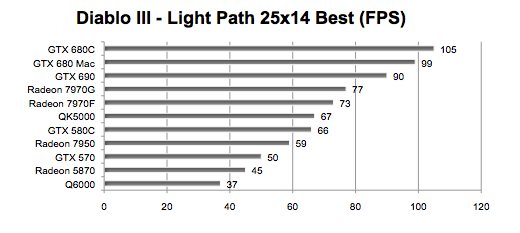

Diablo III -- "Evil is in its prime." Our lonely traveler is on the path to adventure stopping occasionally to zap the undead. Settings are 2560x1440 Fullscreen, vSync OFF, Best Quality, Anti Aliasing enabled. (LONGEST bar indicates FASTEST in frames per second.)

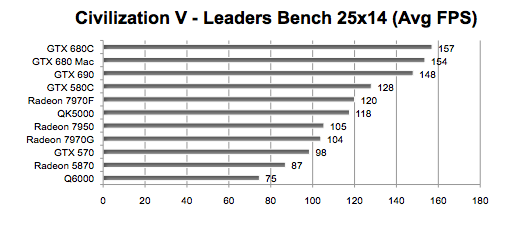

Civilization V is now on Steam. When you enter "-LeaderBenchmark" under Properties > Set Launch Options, the game runs through multiple animated sequences of the various World Leaders. Though it does not simulate real game play, it is GPU intensive. Resolution was 2560x1440 with FullScreen ON. Quality settings were on "Medium" with one exception -- Texture Quality was set to "High." vSync and High Detail Strategic View were both OFF. (LONGER bar means FASTER.)

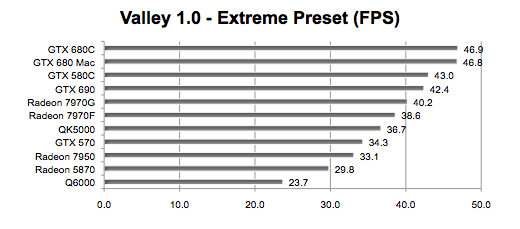

Unigine's Valley "flies" through a forest-covered valley surrounded by vast mountains. It's a bird's-eye view of 64 million square meters of extremely detailed terrain, down to every leaf and flower petal. It features advanced visual technologies: dynamic sky, volumetric clouds, sun shafts, DOF, ambient occlusion. (It is cross platform. For Windows users it is a test of DirectX.) We used the Extreme preset: 1600x900 windowed resolution with 8x Anti-aliasing, Ultra Quality for Shaders and Textures, and with Occlusion, Refraction, and Volumetric Shadows enabled. (LONGER bar means FASTER AVERAGE frames per second.)

FurMark is a Cross-Platform, OpenGL-based GPU stress test (also called a GPU burn-in test). It pushes the GPU to the max in order to test the stability of the graphics card, leading to maximal GPU temperatures (and therefore fan speeds). That's why FurMark is often used by overclockers and graphics card fanatics to validate an overclock, to test a new VGA cooler, or to check the max power consumption of a video card. FurMark is now included in a collection of GPU stress tests called GPUTest.

REACTION

When it comes to CUDA accelerated apps, the EVGA GeForce GTX 680 Mac Edition is beaten by its more muscular 'siblings,' the GTX 690 and GTX 580. It's only slightly slower than the overclocked 680 Classified, which has twice the video memory.

With the exception of the FurMark test, the GTX 680 Mac Edition was second only to the 680 Classified in the OpenGL tests.

COMING SOON

We are working on more challenging Photoshop, Premiere Pro, Final Cut Pro, X-Plane, and Cinema 4D tests. Stay tuned for those results in a future posting.